Between Pixels and Patterns – A Day in the Segmentation Studio

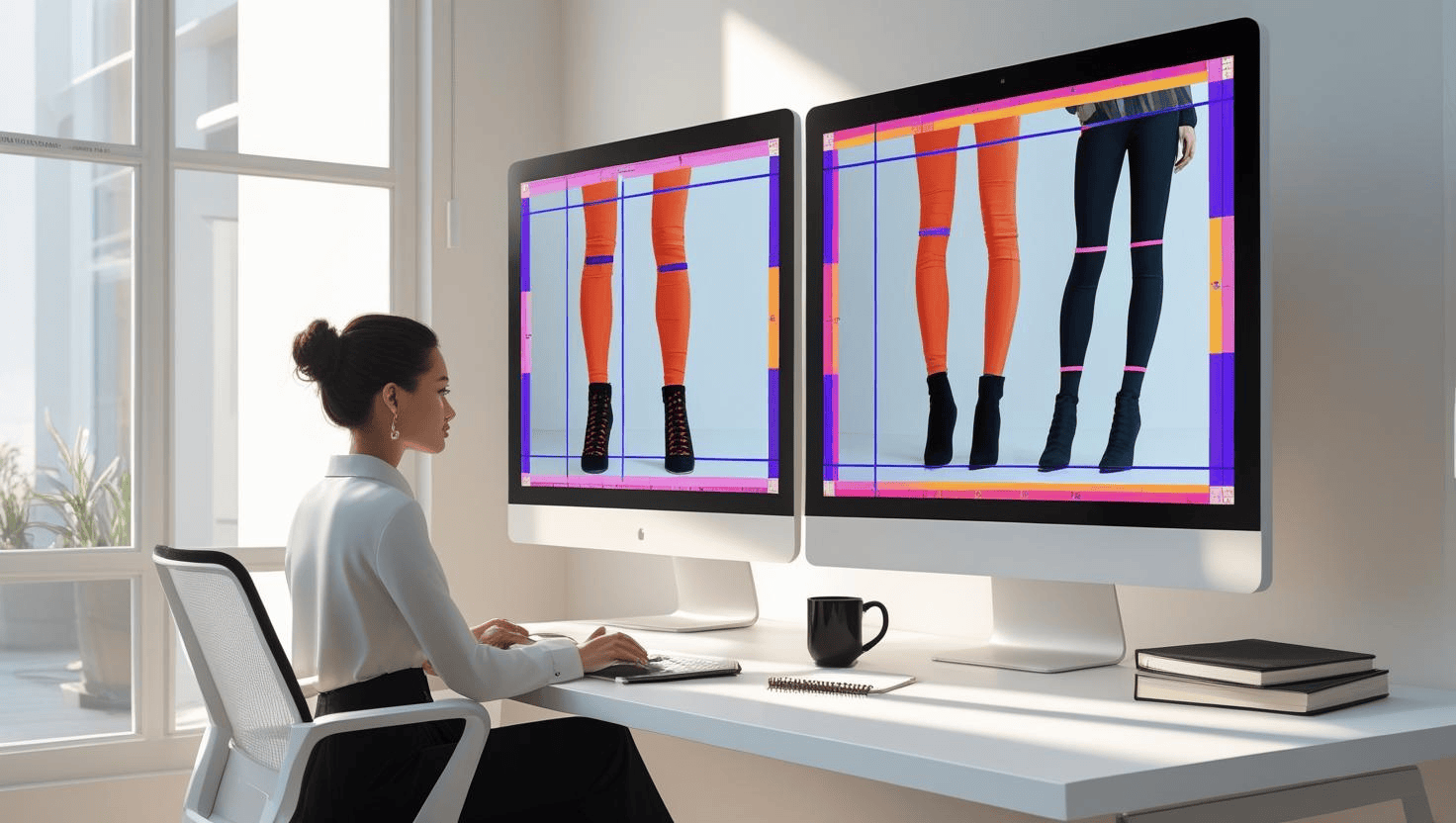

From heels to hoodies, Rafa helps bring fashion into AR with pixel-perfect precision. See how digital tailoring defines the future of virtual style, one mask at a time.

The Fashion Detective Files

Hey, Rafa here 👟✨

I like to think of my job at LexData Labs as part fashion detective, part pixel surgeon. We don’t just work with images; we give every hemline, heel, and hidden sock its moment in the AR spotlight.

My department is what you might call a “Digital Tailoring Unit.” Our mission is to turn everyday photos into pixel-perfect masks so clean that even your favorite AR filter would blush.

Sure, it might sound like digital dress-up, but trust me, it’s more like CSI: Wardrobe Edition. There’s logic, layering, judgment calls, and more tea than I’m willing to admit.

9:00 AM - Client Brief and the First Look 👀

We kick things off with a client call to discuss goals, garment types, and ground rules. Today's mission: flawless pixel-level segmentation for a fashion AR platform. That means isolating shoes, loose pants, legs, and those long skirts that can’t quite decide if they’re dresses or designer tents.

A new image batch lands in our inbox. Batches like this usually contain solo models or full-body shots captured mid-pose. Some are crystal clear; others look like they were taken mid-jump on a moving escalator. My teammate Zarin and I exchange a look. This one’s going to be fun.

With the brief wrapped, I grab my favourite mug, make a killer cup of black tea, and launch my go-to playlist: “Segmentation Nation” (think lo-fi with a side of swagger). The prep begins: masks, music, and a mission.

9:45 AM - Divide and Conquer (The Spreadsheet Ritual)

First stop: the spreadsheet.

It’s where order begins. I split the image set into two parts, mine and Zarin’s, and set up columns for image ID, segment classes present, confusion notes, and QA status.

Some images have clear-cut boots and jeans. Others? Let’s just say a beige sock inside beige loafers on beige carpet is the visual equivalent of a whisper.

10:15 AM - Deep Segmentation Mode 🎯

Now the real magic begins: semantic segmentation.

Each leg can have up to 7 segments, and every pixel matters, not just for accuracy but for realism in AR try-ons.

In one image, I’m dealing with a person in wide culottes that half-hide shiny platform heels. I zoom in to 300%, check where the shadows end, toggle the contrast, then start marking:

- Shoe (left and right)

- Loose pants (left and right)

- Rest of the leg (tight leggings)

One frame takes 7 minutes, another nearly 20. It's a slow art.

Around 11:30, I take a mini stretch break, refill my water bottle, and grab a handful of dry-roasted chickpeas, the perfect no-crumb snack. Zarin pings me: “Is this a skirt or a very committed hoodie?” I open the file and just laugh. We mark it as a skirt and move on.

1:00 PM - Lunch & Label Banter 🍱

Lunch is good leftovers and great conversation. Zarin’s trying to convince me that socks should have their own class. I disagree but admire the commitment.

Then we step outside for a quick 10-minute stroll, a ritual, really. There’s a crowd near the café, and we spot someone wearing layered culottes over boots. Zarin nudges me: “Loose pants, right?” We both laugh. Segmentation logic doesn’t switch off, even during breaks. Half-covered shoes, fabric folds doing acrobatics, real-world chaos: it all feels oddly on-theme.

2:00 PM - QA Time: No Pixel Left Behind 🔍

Post-lunch, we switch roles: I check Zarin’s annotations, and she checks mine. It’s all manual: no scripts, just trained eyes.

Overlap is the enemy, but empty margins are worse. If two segments don’t meet perfectly, AR glitches happen. And we don’t do glitches.

I catch one frame where a tight pant leg was marked as “loose.” I double-check: the fabric hugs the ankle, so I update the label. Better safe than misclassified.

Every time we spot and fix one of these, it feels like catching a silent bug before it ever makes it to the user.
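We do this check by eye, but the rule behind it (no pixel claimed by two segments, no pixel left empty where a segment should be) can be stated precisely in pixel terms. Here is a minimal sketch, assuming each segment is a same-sized binary mask (rows of 0/1) and that a hypothetical `region` mask marks the pixels that should be covered by exactly one segment. The function and class names are illustrative only, not our actual tooling.

```python
# Hypothetical pixel-level version of the QA rule "no overlap, no empty margins".
# Assumption: every mask is a list of rows of 0/1, all the same size, and
# `region` marks pixels that must be covered by exactly one segment.

def overlap_and_gaps(masks, region):
    """Return (overlap_pixels, gap_pixels) as lists of (row, col) coordinates."""
    overlaps, gaps = [], []
    for r, region_row in enumerate(region):
        for c, inside in enumerate(region_row):
            # How many segments claim this pixel?
            hits = sum(mask[r][c] for mask in masks.values())
            if hits > 1:
                overlaps.append((r, c))  # two labels on one pixel
            elif inside and hits == 0:
                gaps.append((r, c))      # should be labeled, but nothing covers it
    return overlaps, gaps


# Tiny 2x4 example with two illustrative classes.
region = [[1, 1, 1, 1],
          [1, 1, 1, 1]]
masks = {
    "shoe_left":        [[0, 0, 1, 1],
                         [0, 0, 1, 1]],
    "loose_pants_left": [[1, 1, 1, 0],
                         [1, 0, 0, 0]],
}
overlaps, gaps = overlap_and_gaps(masks, region)
print(overlaps)  # [(0, 2)]
print(gaps)      # [(1, 1)]
```

Returning coordinates rather than simple counts means each flagged pixel can be pointed to directly, which is what a reviewer needs when fixing a boundary.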

3:30 PM - Quick Break & A Mini Pixel Game 🧠

My brain’s buzzing, so I play a quick self-made game: draw two segmentation outlines blindfolded (okay, eyes closed), then see how closely they match. Call it “the pixel whisperer’s challenge.”

I lose to myself, laugh, and get back to it.
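A natural way to score “how closely they match” is intersection over union (IoU), a common agreement metric for segmentation masks. The game itself isn't scored this way; this is just a sketch, assuming each outline is filled into a same-sized binary mask (rows of 0/1). A score of 1.0 means identical masks, and 0.0 means no shared pixels.

```python
# Intersection over union (IoU) for two same-sized binary masks.

def iou(mask_a, mask_b):
    """Shared pixels divided by pixels covered by either mask."""
    intersection = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            if a or b:
                union += 1
    # Two empty masks agree perfectly; this also avoids dividing by zero.
    return intersection / union if union else 1.0


first_try  = [[0, 1, 1, 0],
              [0, 1, 1, 0]]
second_try = [[0, 0, 1, 1],
              [0, 1, 1, 0]]
print(iou(first_try, second_try))  # 0.6
```

Here 3 pixels are shared and 5 are covered by at least one outline, so the score is 3 / 5 = 0.6.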

4:00 PM – Report Building & Final Sweep 📊

Once the QA is done, I update the sheet with final notes:

✔ All segments present

✔ Clean boundaries (no overlaps, no gaps)

✔ Remark flags resolved

We compile the mask files, match each with its original, and export the metadata files. Then we prepare a summary report detailing class distribution, challenges faced, and sample visuals.
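The report doesn't define “class distribution” precisely. One plausible reading, assumed here, is each class’s share of all labeled pixels in the batch. This sketch assumes each mask is a label map (rows of class names, with `None` for unlabeled background). The class names are illustrative.

```python
from collections import Counter

def class_distribution(label_maps):
    """Share of labeled pixels per class across a batch of label maps.

    Each label map is a list of rows of class names; None marks unlabeled
    pixels (e.g. background) and is left out of the totals.
    """
    counts = Counter()
    for label_map in label_maps:
        for row in label_map:
            counts.update(label for label in row if label is not None)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}


# Two tiny illustrative label maps.
batch = [
    [["shoe_left", "shoe_left", None],
     ["loose_pants_left", "shoe_left", None]],
    [["skirt", "skirt", "shoe_right"],
     [None, None, None]],
]
dist = class_distribution(batch)
for label, share in sorted(dist.items(), key=lambda kv: -kv[1]):
    print(f"{label}: {share:.1%}")
# shoe_left: 42.9%
# skirt: 28.6%
# loose_pants_left: 14.3%
# shoe_right: 14.3%
```

Counting images per class instead of pixels per class would be an equally reasonable alternative. Pixel shares show which classes dominate the training signal, while image counts show how often each class appears at all.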

5:15 PM – Delivery & Reflection ✉️

We submit the final package to the client. Every shoe, every pant hem, every skirt segment is stitched together so that a machine can read it, learn from it, and reproduce it with AR-level precision.

There’s something deeply satisfying about the whole deal, like invisible tailoring for a digital wardrobe.

Why It Matters

Realistic Try-Ons – Every pixel helps virtual fashion look and move like the real deal.

Style + Science – We make AI understand fabric, fold, and form.

Faster Pipelines – Clean segmentation means quicker model training and smoother user experiences.

We aren’t just marking clothing; we’re crafting the future of how people wear and see fashion through screens, mirrors, and augmented dreams.

Final Thought

I might not design the clothes, but I help define how they’re experienced in virtual space. One precise mask at a time.

Catch you in the next batch. Rafa out! 🎧🖌️
