Datasheets + Model Cards = Smarter AI

Smarter AI starts with smarter data. Learn how datasheets and model cards support transparency, fairness, and compliance in today’s evolving AI landscape.

With the EU AI Act on the horizon, how can organizations ensure their datasets are fair, transparent, and reproducible? In today’s AI landscape, where regulatory scrutiny is intensifying, well-documented datasets are no longer optional; they are essential for ethical, auditable, and high-performing AI.

Why Dataset Documentation Matters

Training AI without understanding data provenance is like deploying code without version control: risky and error-prone. Comprehensive documentation improves explainability, helps surface hidden biases, reduces the risk of model failures, and builds trust among data scientists, auditors, and stakeholders.

Datasets with clear provenance:

- Make error detection easier

- Support reproducibility of experiments

- Simplify regulatory compliance (GDPR, HIPAA, and emerging AI governance frameworks)

The Role of Datasheets, Model Cards, and More

The AI research community has long emphasized the need for structured documentation to ensure suitability, clarity, and reproducibility:

Datasheets for Datasets — First introduced by Gebru et al. (2018), Datasheets for Datasets propose standardized documentation that captures comprehensive metadata about datasets — including their composition, collection methods, labeling procedures, known biases, and ethical considerations. These datasheets enable auditors, researchers, and practitioners to understand precisely what data is used and how, thereby enhancing transparency and trustworthiness in AI systems.
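In practice, a datasheet can be kept as structured, machine-readable metadata that travels with the dataset. The sketch below is a minimal Python illustration built on a hypothetical `Datasheet` class; its fields are loosely inspired by the question categories in Gebru et al. (motivation, composition, collection, labeling, uses), but the paper itself poses those as prose questions and does not define this format. The `missing_fields()` helper shows one simple way an automated completeness check could work.

```python
from dataclasses import dataclass, field, fields, asdict
import json


@dataclass
class Datasheet:
    """Hypothetical minimal datasheet; field names are illustrative, not a standard schema."""
    name: str
    version: str
    motivation: str = ""            # why the dataset was created, and by whom
    composition: str = ""           # what each instance represents, counts, label set
    collection_process: str = ""    # how, when, and from where the data was gathered
    labeling_procedure: str = ""    # who annotated, under which guidelines and QA steps
    known_biases: list[str] = field(default_factory=list)
    ethical_considerations: str = ""
    intended_uses: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of fields still empty, in declaration order (a simple completeness check)."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def to_json(self) -> str:
        """Serialize the datasheet so it can be versioned alongside the data."""
        return json.dumps(asdict(self), indent=2)


# Illustrative values only.
sheet = Datasheet(
    name="example-dataset",
    version="0.1.0",
    composition="Cropped person images with identity labels",
    known_biases=["Indoor scenes over-represented (illustrative)"],
)
print(sheet.missing_fields())
# ['motivation', 'collection_process', 'labeling_procedure', 'ethical_considerations', 'intended_uses']
```

Keeping the datasheet in code or JSON, rather than only in a PDF, lets a build or release step refuse to publish a dataset whose documentation is incomplete.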

Model Cards — Building on this momentum, Mitchell et al. (2019) introduced Model Cards for Model Reporting, a framework for documenting model performance, limitations, intended use cases, and potential ethical risks. Model cards help stakeholders assess whether a model is appropriate for deployment within specific operational or societal contexts.
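A model card can be captured the same way. In this sketch the `ModelCard` class and its fields are hypothetical, chosen to mirror a few of the sections Mitchell et al. describe: intended use, quantitative results disaggregated by group, ethical considerations, and caveats. The subgroup numbers below are placeholders, not real evaluation results.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Hypothetical minimal model card; fields loosely mirror sections in Mitchell et al. (2019)."""
    model_name: str
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    # One evaluation metric (e.g. accuracy) disaggregated by subgroup.
    subgroup_metrics: dict[str, float] = field(default_factory=dict)
    limitations: list[str] = field(default_factory=list)
    ethical_risks: list[str] = field(default_factory=list)

    def max_subgroup_gap(self) -> float:
        """Largest metric difference between any two subgroups (0.0 if fewer than two)."""
        if len(self.subgroup_metrics) < 2:
            return 0.0
        values = list(self.subgroup_metrics.values())
        return max(values) - min(values)

    def to_markdown(self) -> str:
        """Render the card as short Markdown for human reviewers."""
        out = [f"# Model card: {self.model_name}", "", f"- **Intended use:** {self.intended_use}"]
        if self.out_of_scope_uses:
            out.append("- **Out of scope:** " + "; ".join(self.out_of_scope_uses))
        out += ["", "| Subgroup | Metric |", "|---|---|"]
        for group, value in sorted(self.subgroup_metrics.items()):
            out.append(f"| {group} | {value:.3f} |")
        for label, items in (("Limitations", self.limitations), ("Ethical risks", self.ethical_risks)):
            if items:
                out += ["", f"- **{label}:** " + "; ".join(items)]
        return "\n".join(out)


# Placeholder values for illustration only; these are not real evaluation results.
card = ModelCard(
    model_name="example-reid-model",
    intended_use="Research evaluation of person re-identification",
    out_of_scope_uses=["Identifying individuals without a lawful basis"],
    subgroup_metrics={"subgroup_a": 0.91, "subgroup_b": 0.86},
    limitations=["Not evaluated on night-time footage"],
)
print(card.to_markdown())
print(f"Largest subgroup gap: {card.max_subgroup_gap():.2f}")
```

Disaggregating metrics is what lets a reviewer see that a model performing well on average may still underperform for a particular group; deciding what gap is acceptable remains a policy choice that the card records rather than computes.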

Data Statements & Fact Sheets — Related frameworks such as Data Statements for NLP (Bender & Friedman, 2018) and AI FactSheets (Arnold et al., 2019), which are modeled on suppliers' declarations of conformity, further refine these principles, offering structured guidance on dataset provenance, social context, and compliance requirements. Collectively, these initiatives form the backbone of responsible AI documentation, fostering transparency, accountability, and ethical governance across the AI lifecycle.

By adopting and citing these established frameworks, organizations signal adherence to community standards and best practices, strengthening the credibility of their AI initiatives.

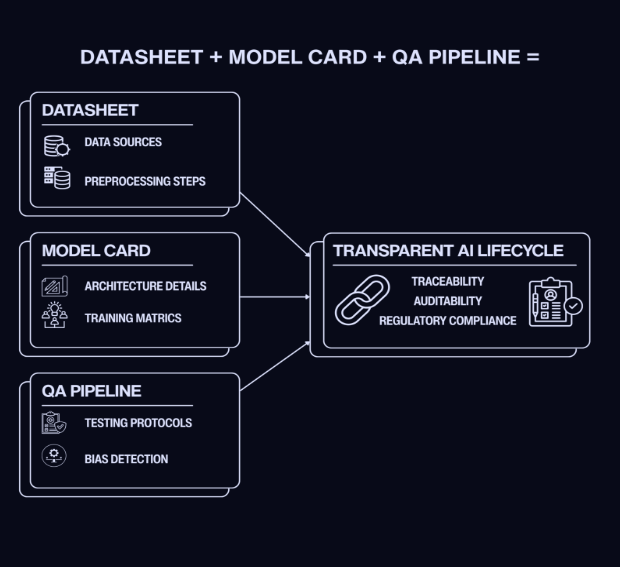

This diagram highlights:

- Inputs: Datasheet, Model Card, and QA Pipeline.

- Result: A Transparent AI Lifecycle that emphasizes traceability and auditability.

LexData Labs: Reliable, Verifiable, and Compliant Datasets

At LexData, dataset documentation is more than a regulatory requirement; it is the foundation for reproducible, AI-ready data. Our platform enables clients to:

- Monitor lineage in real time: Capture every transformation step and data source.

- Standardize and reuse datasets: Structured datasheets enable expansion or repurposing without duplication.

- Meet regulatory requirements: Automated conformance checks for GDPR, HIPAA, and AI governance frameworks ensure audit readiness.
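Lineage capture can be as simple as recording, for every transformation, a content hash of the data before and after the step. The sketch below assumes in-memory records and a hypothetical `LineageLog` class; it is not LexData's platform, and a production system would persist the log and hash files or partitions rather than whole Python lists.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

Records = list[dict]


def fingerprint(records: Records) -> str:
    """SHA-256 of a canonical JSON encoding; any change to the records changes the hash."""
    payload = json.dumps(records, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class LineageLog:
    """Hypothetical append-only record of the transformations applied to one data source."""
    source: str
    steps: list[dict] = field(default_factory=list)

    def apply(self, name: str, fn: Callable[[Records], Records], records: Records) -> Records:
        """Run one transformation and log the step name, row counts, and input/output hashes."""
        before = fingerprint(records)  # hash first, in case fn mutates its input
        result = fn(records)
        self.steps.append({
            "step": name,
            "input_sha256": before,
            "output_sha256": fingerprint(result),
            "n_in": len(records),
            "n_out": len(result),
            "utc_time": datetime.now(timezone.utc).isoformat(),
        })
        return result

    def is_consistent(self) -> bool:
        """True if every step consumed exactly the output of the step before it."""
        return all(
            prev["output_sha256"] == cur["input_sha256"]
            for prev, cur in zip(self.steps, self.steps[1:])
        )


# Illustrative records; the source URI is a hypothetical placeholder.
raw = [{"id": 1, "label": "person"}, {"id": 2, "label": ""}, {"id": 1, "label": "person"}]
log = LineageLog(source="example://camera-export/batch-01")
labeled = log.apply("drop_unlabeled", lambda rs: [r for r in rs if r["label"]], raw)
unique = log.apply("dedupe_by_id", lambda rs: list({r["id"]: r for r in rs}.values()), labeled)

for step in log.steps:
    print(step["step"], step["n_in"], "->", step["n_out"], step["output_sha256"][:12])
print("chain consistent:", log.is_consistent())
```

Because each step stores the hashes of its input and output, an auditor can recompute the fingerprints and confirm that the documented pipeline actually produced the released data.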

Our automated tools reduce manual effort while maintaining accuracy and completeness in both datasheets and model cards.

Real Impact: A LexData Labs Client Case

A client required 100,000 fully annotated CCTV images to train a fair person re-identification model. Available open-source datasets frequently failed to meet the client's quality requirements. LexData implemented a robust data engineering and QA pipeline:

- Structured labeling: Captured distinctive features for robustness.

- Quota-based sampling & multistage QA: Ensured balanced representation across demographics.

- Automated flagging: Identified partial or multi-subject images for manual review.
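The sampling and flagging steps above can be sketched in a few lines. This is a simplified illustration rather than the pipeline used in the case study: the `group` attribute, the detector outputs `num_persons` and `visible_fraction`, and the 0.6 visibility threshold are all assumptions, and only the first, automated QA stage is shown.

```python
import random
from collections import defaultdict


def quota_sample(items: list[dict], group_key: str, quota: int, seed: int = 0):
    """Draw up to `quota` items per group so that no single group dominates.

    Groups smaller than `quota` are kept whole and reported as shortfalls
    instead of being silently oversampled.
    """
    rng = random.Random(seed)  # fixed seed makes the draw reproducible
    by_group: dict[str, list[dict]] = defaultdict(list)
    for item in items:
        by_group[item[group_key]].append(item)
    sample, shortfalls = [], {}
    for group in sorted(by_group):
        members = by_group[group]
        if len(members) < quota:
            shortfalls[group] = quota - len(members)
            sample.extend(members)
        else:
            sample.extend(rng.sample(members, quota))
    return sample, shortfalls


def flag_for_review(image: dict, min_visible_fraction: float = 0.6) -> list[str]:
    """Return reasons an image should go to manual review; an empty list means it passes."""
    reasons = []
    if image["num_persons"] > 1:
        reasons.append("multi-subject")
    elif image["num_persons"] == 0:
        reasons.append("no subject")
    if image["visible_fraction"] < min_visible_fraction:
        reasons.append("partial subject")
    return reasons


# Toy records; in practice num_persons and visible_fraction would come from an upstream detector.
rows = [("A", 1, 0.90), ("A", 1, 0.95), ("A", 2, 0.80), ("A", 1, 0.40), ("B", 1, 0.85)]
images = [
    {"id": i, "group": g, "num_persons": n, "visible_fraction": v}
    for i, (g, n, v) in enumerate(rows)
]
sample, shortfalls = quota_sample(images, "group", quota=2)
print(len(sample), shortfalls)  # 3 {'B': 1}
for img in sample:
    print(img["id"], flag_for_review(img) or "ok")
```

Reporting shortfalls, rather than quietly duplicating images from small groups, makes under-representation visible so it can be addressed by further collection or recorded in the datasheet.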

This approach ensured fairness, transparency, and relevance for ethical AI training.

Regulatory and Reproducibility Benefits

As AI faces increasing regulatory oversight, robust dataset documentation provides evidence of responsible model development. It also makes reproducibility far more attainable: researchers can replicate experiments reliably, detect and address bugs or biases, and collaborate more effectively. This accelerates innovation and allows organizations to build confidently on existing work.

Future Outlook: The Evolution of Dataset Documentation

As AI governance matures, dataset documentation will continue to evolve:

- Automated governance pipelines will integrate real-time auditing and bias detection.

- Foundation model datasets will require enhanced verifiability and standardized annotations.

- AI auditing tools will increasingly rely on datasheets and model cards as evidence of regulatory compliance and model accuracy.

Organizations that adopt robust, tech-driven documentation practices today will not only earn regulatory acceptance and stakeholder trust but also unlock a competitive advantage: faster AI deployment, safer experimentation, and scalable innovation.

The future of AI isn’t just about smarter models; it’s about smarter data. With understandable, well-documented, and ethically curated datasets, businesses can confidently build the next generation of AI systems that are well-founded, fair, and future-ready. By embracing this approach, innovators won’t just follow the AI revolution; they’ll define it, responsibly.
