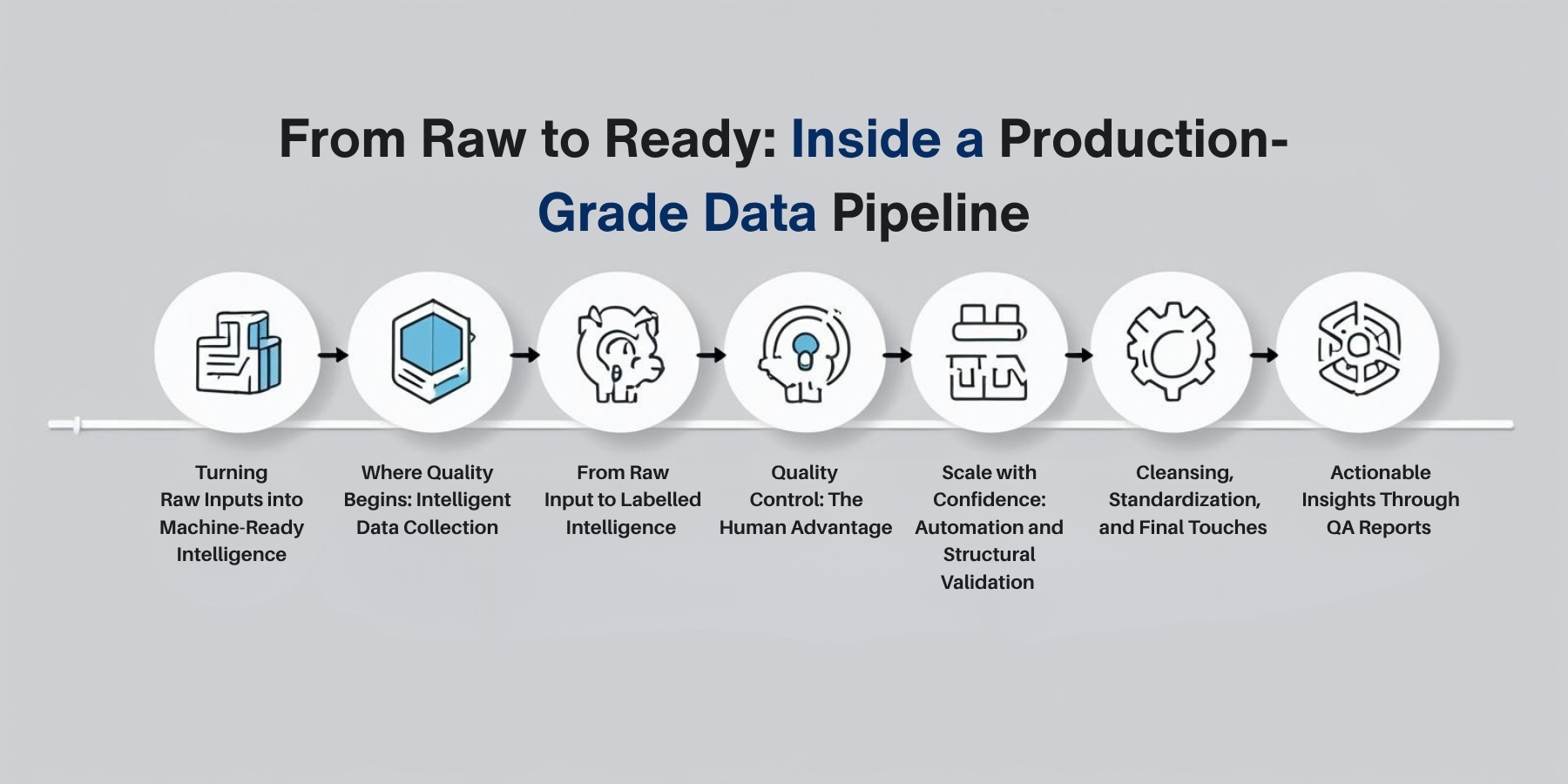

From Raw to Ready: Inside a Production-Grade Data Pipeline

A step-by-step walkthrough of how structured, high-quality data powers real-world AI—across industries like Agro robotics & retail

Turning Raw Inputs into Machine-Ready Intelligence

In today’s AI-driven world, the saying "garbage in, garbage out" has never been more relevant. AI models thrive not just on massive amounts of data but on clean, structured, and verified datasets that reflect real-world patterns. This blog provides a deep dive into the end-to-end AI data pipeline, showing how raw data transforms into high-quality training material ready for machine learning integration.

From data collection and annotation to automated validation and final delivery, we’ll explore every layer of our production-grade pipeline. Whether you're building AI for retail automation or robotic vision, this guide explains how a robust data pipeline saves time, reduces cost, and delivers accuracy from day one.

Where Quality Begins: Intelligent Data Collection

A successful AI project starts with strategic data acquisition. Depending on client needs, we ingest raw data directly from internal sources. At this early stage, it’s essential to check that the volume, format, and data types align with both technical requirements and the broader machine learning objective. This process sets the foundation for every other step that follows and prevents costly revisions down the line.

We’ve seen this firsthand with clients in different sectors. For example, our collaboration with Agro robotics began with collecting thousands of crop and field images to train autonomous farming machines. In contrast, for alert in the retail space, the initial data included security alert footage to train fraud detection systems.

From Raw Input to Labelled Intelligence

Once the data is verified, our annotation process begins. This is where the raw input is given meaning through accurate, consistent labelling. Depending on the project, our team works with text, image, video, or audio using industry-standard tools like CVAT and Label Studio to assign the correct attributes, tags, or bounding boxes. These annotations are always guided by detailed project-specific guidelines to ensure the labelled data aligns with the AI model’s training needs.

For agricultural robotics, we annotated in real-world field conditions, helping machines distinguish between plants, vehicles, and obstacles. In retail AI projects, we validated alert categories and anomaly patterns, giving machine learning models the context they need to detect real threats in real time.

Quality Control: The Human Advantage

Even the best annotation tools need human oversight. Our dedicated QA team manually reviews a statistically significant portion of the annotated data to ensure it’s not just technically correct but contextually relevant and free of inconsistencies. This kind of human-in-the-loop validation is especially crucial for edge cases that automated systems might misinterpret.

Manual review also helps identify trends in annotator performance and flags any recurring issues early. This ensures the final dataset isn’t just labelled; it’s trustworthy.

Scale with Confidence: Automation and Structural Validation

Once annotations pass human review, the next phase involves automation. We deploy intelligent scripts to quickly scan large volumes of data for structural issues, such as incorrect formats, label duplication, or misalignment with naming conventions. These tools dramatically increase speed without compromising data integrity.

For instance, in large-scale projects like robotics, automated validation can reduce turnaround time by over 50%, while maintaining compliance with strict annotation rules. The ability to scale without sacrificing accuracy is what makes our pipeline production-grade.

Cleansing, Standardization, and Final Touches

Errors, if found, don’t stay errors. We run final rounds of data cleansing and standardization to correct mislabelled fields, fill gaps, and ensure every dataset adheres to a uniform structure. This step is vital for seamless integration into machine learning pipelines, where even small inconsistencies can result in significant performance drops.

Standardization also ensures datasets can be reused, versioned, and maintained over time, making them more valuable in future iterations or retraining cycles.

Actionable Insights Through QA Reports

At the end of the pipeline, we generate a full QA report containing performance metrics, error summaries, and actionable recommendations. This transparency helps clients understand the quality of their data and enables them to make informed decisions about their AI deployment strategies.

These reports aren’t just for the client. They’re integral to our own feedback loop. They help us retrain annotators, refine processes, and continuously improve accuracy across projects.

“A properly constructed data pipeline strategy can accelerate and automate the processing of large-scale AI workloads.”

-Bill Schmarzo, Dell Technologies

Why One Size Doesn't Fit All

One of the defining features of a production-grade pipeline is flexibility. Every client faces unique challenges and our workflow adjusts accordingly.

In retail, speed and accuracy are paramount, requiring fast processing of time-sensitive footage with real-time validation. In robotics, spatial precision and consistent labelling across thousands of images are essential for reliable autonomous systems.

We use tools like Power BI to provide clients with live dashboards and complete visibility into project progress, quality scores, and data readiness. This level of orchestration ensures that no matter the vertical, our pipeline delivers consistent, reliable outcomes.

Conclusion: The Invisible Backbone of Successful AI

A well-designed AI data pipeline is invisible to end users but absolutely critical to AI success. From collection to delivery, each step builds toward a single outcome: clean, high-impact data that helps your AI model learn faster, predict better, and scale with confidence.

Whether you’re automating checkout systems, enabling self-driving tractors, or parsing millions of financial transactions, the integrity of your AI begins long before the model is deployed. It begins with the pipeline.

Invest in clean data now and set your AI up for success later!

View related posts

AI at the Edge: Smarter Annotation for the Offline World

Edge-ready annotation brings real-time AI to remote environments. Learn how LexData Labs enables secure, offline intelligence for drones and field robotics.

Start your next project with high-quality data

%201.png)