Reinforcement Learning Needs the Right Feedback Data

Reinforcement learning needs accurate, structured, unbiased feedback. LexData Labs builds strong reward systems and human-checked data to guide safe, adaptive, effective learning.

Reinforcement Learning (RL) is uniquely sensitive to dataset quality because agents learn from trial-and-error patterns encoded in data. Without accurate, representative, and bias-controlled datasets, even the most advanced RL algorithms will fail to deliver safe, effective, and trustworthy outcomes.


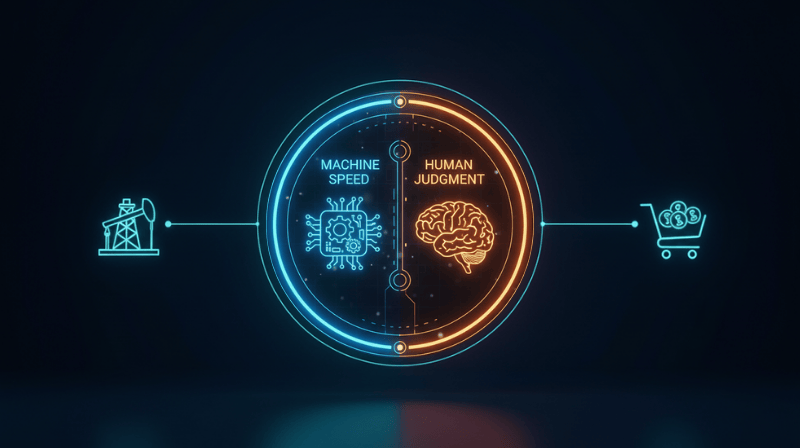

Think of RL as coaching a beginner athlete. Without the right feedback, they’ll practice the wrong moves until they’re perfect at failing. In reinforcement learning, artificial intelligence doesn’t just learn from existing data; it learns from experience. RL is the science of trial, error, and correction, where algorithms improve through structured feedback signals. Each decision an agent makes, whether a drone route, a robot’s grip, or an in-store alert, is shaped by feedback that tells it what worked and what didn’t.
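To make “trial, error, and correction” concrete, here is a minimal sketch of one classic update rule, tabular Q-learning, in Python. It is a generic textbook illustration, not LexData’s pipeline, and the state and action names are hypothetical. Each call nudges the agent’s estimate of an action’s value toward the reward it just received plus the best value it expects from the next state.

```python
from collections import defaultdict

def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update and return the new value estimate."""
    # Best value the agent currently expects to collect from the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    # Temporal-difference error: how much better or worse things went than predicted.
    td_error = reward + gamma * best_next - q[(state, action)]
    q[(state, action)] += alpha * td_error
    return q[(state, action)]

q = defaultdict(float)  # unseen state-action pairs start at 0.0
actions = ["left", "right"]
print(q_update(q, "s0", "right", 1.0, "s1", actions))  # 0.1
```

Had that reward been mislabeled as `-1.0`, the same update on a fresh table would have pushed the estimate to `-0.1`: the agent learns the wrong lesson exactly as efficiently as the right one.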

When the feedback is wrong, delayed, or incomplete, learning falters. The system not only repeats its mistakes but also optimizes for the wrong goal. That’s why RL doesn’t just need any data; it needs the right feedback data to learn the right way and deliver the right outcome. Reinforcement Learning from Human Feedback (RLHF) is used extensively in generative AI applications, including large language models (LLMs).

In a 2017 paper, OpenAI’s Paul F. Christiano, alongside other researchers from OpenAI and DeepMind, showed that RLHF could train AI agents to play Atari games and perform simulated robotic locomotion tasks.
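In that paper, humans compared pairs of short trajectory segments, and a reward model was fit so that the segment with the higher summed predicted reward is more likely to be the preferred one (a Bradley-Terry model), trained with a cross-entropy loss against the human labels. The sketch below shows only that scoring and loss in plain Python, assuming the per-step predicted rewards are already available as lists; a real system would produce them with a neural network and minimize the loss by gradient descent.

```python
import math

def preference_probability(rewards_a, rewards_b):
    """Bradley-Terry probability that segment A is preferred over segment B."""
    score_a, score_b = sum(rewards_a), sum(rewards_b)
    # Equivalent to exp(score_a) / (exp(score_a) + exp(score_b)).
    return 1.0 / (1.0 + math.exp(score_b - score_a))

def preference_loss(rewards_a, rewards_b, human_prefers_a):
    """Cross-entropy between the predicted preference and the human's label."""
    p_a = preference_probability(rewards_a, rewards_b)
    return -math.log(p_a) if human_prefers_a else -math.log(1.0 - p_a)

# Hypothetical predicted per-step rewards for two trajectory segments.
segment_a = [0.5, 0.5]
segment_b = [0.0, 0.0]
print(round(preference_probability(segment_a, segment_b), 3))                  # 0.731
print(round(preference_loss(segment_a, segment_b, human_prefers_a=True), 3))   # 0.313
print(round(preference_loss(segment_a, segment_b, human_prefers_a=False), 3))  # 1.313
```

The loss is small when the reward model already agrees with the human and large when it does not, which is what pulls the learned reward toward human judgment.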

The Science of Learning Through Feedback

“The ability to compute an accurate reward function is essential for optimizing a dialogue policy via reinforcement learning.” - Su et al. (2016)

At its core, reinforcement learning mimics how humans and animals learn: through rewards and consequences. Each “reward signal” tells the model whether its last move brought it closer to success. While conventional reinforcement learning has succeeded in several fields, it can struggle with complex tasks where a clear-cut definition of success, and therefore a reward function, is hard to establish. For this to work, feedback must be:

- Structured: encoded in a consistent, interpretable format

- Reliable: free of noise, bias, or misalignment

- Contextual: grounded in real-world cause-and-effect, not abstract metrics
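One way to make these three properties checkable is to give every feedback event a fixed schema and reject malformed records at ingestion. The Python sketch below is illustrative only: the field names, the `[-1, 1]` reward range, and the allowed sources are assumptions. Checks like these catch malformed records, not subtle bias, which still needs human review.

```python
from dataclasses import dataclass

ALLOWED_SOURCES = {"human", "automated"}  # assumed provenance labels

@dataclass
class FeedbackRecord:
    episode_id: str
    step: int
    action: str
    reward: float
    source: str    # who produced the signal, so its reliability can be audited
    context: dict  # real-world conditions, e.g. {"terrain": "dust"}

    def __post_init__(self):
        # Structured: a valid step index and a bounded, consistent reward scale.
        if not isinstance(self.step, int) or self.step < 0:
            raise ValueError("step must be a non-negative integer")
        if not -1.0 <= self.reward <= 1.0:
            raise ValueError(f"reward {self.reward} outside the assumed [-1, 1] range")
        # Reliable: every signal must have a known provenance.
        if self.source not in ALLOWED_SOURCES:
            raise ValueError(f"unknown feedback source: {self.source!r}")
        # Contextual: a reward without the conditions it was earned in is rejected.
        if not self.context:
            raise ValueError("context must describe the conditions of the step")

record = FeedbackRecord("ep-001", 3, "turn_left", 0.8, "human", {"terrain": "dust"})
```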

Positive reinforcement in AI strengthens desired behavior through rewards. For instance, a warehouse robot might receive a high reward score each time it places an item correctly or optimizes a route to save time. These rewards act as motivation, guiding the model to repeat successful strategies and maximize performance. Balance is key, however: too many easy rewards can distort learning, leading the model to chase “quick wins” over correct outcomes.
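A minimal sketch of that balance, assuming a hypothetical warehouse reward with made-up magnitudes: the time-saving bonus is paid only when the placement is correct, and it is capped below the base reward so speed can never outweigh accuracy.

```python
def placement_reward(placed_correctly: bool, seconds_saved: float,
                     bonus_per_second: float = 0.01, max_bonus: float = 0.5) -> float:
    """Hypothetical reward for one pick-and-place action."""
    if not placed_correctly:
        # No speed bonus for a wrong placement, so "fast but wrong" never pays.
        return -1.0
    # Cap the bonus below the base reward so correctness stays the dominant signal.
    bonus = min(max(seconds_saved, 0.0) * bonus_per_second, max_bonus)
    return 1.0 + bonus

print(placement_reward(True, 20.0))
print(placement_reward(True, 1000.0))   # 1.5
print(placement_reward(False, 1000.0))  # -1.0
```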

Negative reinforcement, on the other hand, works by reducing or completely removing a penalty once the model corrects its behavior and improves performance. For example, an autonomous vehicle may receive a penalty for incorrect driving patterns but gradually “earns freedom” from that penalty as it improves. While this helps enforce safety standards, it may also limit creativity, as the system learns to do just enough to avoid negative consequences.
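One way to let an agent “earn freedom” from a penalty, borrowed from Lagrangian methods in constrained RL and not necessarily how any particular vehicle stack does it, is to adapt the penalty weight: raise it while the observed violation rate exceeds a target budget, and relax it toward zero once the agent stays within budget. The function names, the 1% target, and the step size below are illustrative assumptions.

```python
def update_penalty_weight(weight: float, violation_rate: float,
                          target_rate: float = 0.01, step_size: float = 5.0) -> float:
    """Dual-ascent-style update of the penalty weight; it never drops below zero."""
    return max(0.0, weight + step_size * (violation_rate - target_rate))

def penalized_reward(task_reward: float, violations: int, weight: float) -> float:
    """Task reward minus the current price of each safety violation."""
    return task_reward - weight * violations

weight = 1.0
# Hypothetical violation rates measured after successive training rounds.
for rate in [0.20, 0.10, 0.0, 0.0]:
    weight = update_penalty_weight(weight, rate)
    print(f"violation rate {rate:.2f} -> penalty weight {weight:.2f}")
```

The weight keeps rising while violations stay above budget and only starts to fall once the agent is consistently within it, so the relaxation has to be earned. The target budget is also where “just enough” gets defined, which is the trade-off described above.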

Without structured, reliable, and contextual feedback, even the best RL algorithms can converge on suboptimal or even unsafe decisions, turning intelligence into a means of error amplification. Across industries, reward design and training stability are common pain points in the RL process.

At LexData Labs, we help organizations build smarter feedback ecosystems, creating a dynamic where rewards and consequences work hand in hand to guide AI learning & performance. When feedback is designed carefully and with precision, rewards don’t just encourage success; they spark innovation, and consequences don’t just correct mistakes; they create dependability. This balance allows AI systems to learn with clarity and evolve into safer, more reliable decision-makers.

When Feedback Shapes the Real World

In real-world environments, every action becomes a lesson. From autonomous robots steering through complex terrains to in-store AI analyzing crowd flow, reinforcement learning thrives on one essential ingredient: accurate, structured feedback. LexData Labs brings this to life across diverse industries, where our datasets help machines not only see, but understand what good decisions look like.

Imagine an agro robot in a dusty field. At the edge of a sun-scorched horizon, it hums to life, guided not just by mechanical precision but by intelligent data that helps it interpret the world around it. In agriculture, our annotated imagery for agro-robots transforms environmental feedback involving dust, obstacles or uneven terrain into the reward signals that teach navigation systems how to move safely and accurately in GPS-denied conditions. In vehicle inspection, our dent detection pipelines feed reinforcement learning models with consistent validation data, allowing systems to distinguish minor imperfections from meaningful damage with real operational precision.
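As an illustration of turning annotated events into a navigation reward, the sketch below combines potential-based reward shaping, a standard technique that rewards progress toward a goal, with a fixed penalty whenever an annotated obstacle is hit. It assumes the robot has a position estimate from some non-GPS source such as odometry and that `hit_obstacle` is derived from annotated frames; both assumptions, the goal, and the penalty size are hypothetical.

```python
import math

def potential(position, goal):
    """Higher (less negative) the closer the robot is to the goal."""
    return -math.dist(position, goal)

def navigation_reward(position, next_position, goal, hit_obstacle,
                      gamma=0.99, collision_penalty=10.0):
    # Shaping term gamma * phi(s') - phi(s): positive when the step makes progress.
    progress = gamma * potential(next_position, goal) - potential(position, goal)
    # hit_obstacle is assumed to come from annotated obstacle events.
    return progress - (collision_penalty if hit_obstacle else 0.0)

goal = (10.0, 0.0)
print(navigation_reward((0.0, 0.0), (1.0, 0.0), goal, hit_obstacle=False))
print(navigation_reward((0.0, 0.0), (1.0, 0.0), goal, hit_obstacle=True))
```

Setting `gamma` to the agent’s own discount factor is what keeps the shaping term from changing which policy is optimal; the collision penalty, by contrast, is meant to change it.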

Picture a bustling retail floor where every movement, pause, and interaction becomes part of an intelligent learning loop. In retail environments, our work with CCTV analytics has made feedback loops actionable. Through meticulously labeled video data, we teach models to interpret crowd dynamics, track foot traffic, and distinguish employee from customer movement in real time. These insights are tied to validated alerts, so models continuously learn and improve through human-in-the-loop quality assurance. Whether it’s optimizing store traffic flow, verifying alert accuracy, or refining face recognition consistency across camera angles, every dataset LexData builds is a structured feedback system designed to support effective reinforcement learning.
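Validated alerts can be produced in many ways. One simple pattern, sketched below with assumed labels and an assumed two-thirds agreement threshold, is to have several reviewers judge each model alert, accept the majority verdict only when agreement is high enough, and escalate the rest to an expert instead of feeding a contested label back into training.

```python
from collections import Counter
from typing import Optional

def review_alert(verdicts: list[str], min_agreement: float = 2 / 3) -> str:
    """Return the majority verdict, or 'escalate' when reviewers disagree too much."""
    if not verdicts:
        return "escalate"
    label, votes = Counter(verdicts).most_common(1)[0]
    if votes / len(verdicts) < min_agreement:
        return "escalate"
    return label

def alert_feedback(label: str) -> Optional[float]:
    """Map a reviewed label to a training signal; escalated alerts yield none yet."""
    return {"confirmed": 1.0, "false_alarm": -1.0}.get(label)

print(review_alert(["confirmed", "confirmed", "false_alarm"]))  # confirmed
print(review_alert(["confirmed", "false_alarm"]))               # escalate
print(alert_feedback(review_alert(["false_alarm"] * 3)))        # -1.0
```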

By transforming raw sensory inputs into meaningful reinforcement signals, LexData Labs bridges the gap between machine intelligence and human-in-the-loop expertise, ensuring that every model learns efficiently and in sync with real-world behavioral patterns.

LexData’s Role: Turning Experience into Insight

At LexData Labs, our team of experts helps encode, validate, and refine the feedback loops that drive smarter learning.

We do this through:

- Reward Function Encoding: Translating operational goals such as navigation accuracy, response speed, or customer engagement into measurable reward signals that reinforcement learning models can interpret and optimize.

- Human-in-the-Loop Validation: Embedding expert review within feedback pipelines to ensure each data label and system response reflects real human oversight, not just automated output.

- Scenario Simulation: Stress-testing feedback data under real-world conditions (dust, occlusions, lighting changes, fluctuating crowd density) to identify bias, prevent model behavior drift and streamline overall learning & performance.
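To illustrate the first step, reward function encoding, here is a minimal Python sketch that turns several operational metrics into one scalar reward: each metric is clipped to an expected range, scaled to `[0, 1]` (flipped when lower is better, as with response time), and combined with weights. The metric names, ranges, and weights are hypothetical, and a weighted sum is only one of several ways to combine objectives; choosing those numbers is where the real design work lies.

```python
from dataclasses import dataclass

@dataclass
class MetricSpec:
    weight: float
    low: float    # value that scores 0 (or 1 when lower is better)
    high: float   # value that scores 1 (or 0 when lower is better)
    higher_is_better: bool = True

def encode_reward(metrics: dict[str, float], specs: dict[str, MetricSpec]) -> float:
    """Weighted sum of normalized metrics; stays in [0, 1] when weights sum to 1."""
    total = 0.0
    for name, spec in specs.items():
        value = min(max(metrics[name], spec.low), spec.high)  # clip outliers
        score = (value - spec.low) / (spec.high - spec.low)
        if not spec.higher_is_better:
            score = 1.0 - score
        total += spec.weight * score
    return total

# Hypothetical operational goals translated into reward terms.
specs = {
    "navigation_accuracy": MetricSpec(weight=0.5, low=0.0, high=1.0),
    "response_seconds": MetricSpec(weight=0.3, low=0.5, high=5.0, higher_is_better=False),
    "engagement_rate": MetricSpec(weight=0.2, low=0.0, high=1.0),
}
print(encode_reward({"navigation_accuracy": 0.9, "response_seconds": 2.0,
                     "engagement_rate": 0.4}, specs))
```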

With this foundation, reinforcement learning becomes predictable, transparent, and performance-aligned, ready for real-world deployment across various industries.

Smarter Feedback: Smarter Learning

Reinforcement learning is powerful precisely because it adapts. It recognizes changes, updates, and aligns dynamically with specific tasks and requirements. But adaptation only works when feedback reflects the truth. Therefore, reliable feedback data is imperative to reinforcement learning.

At LexData Labs, we know that in machine learning, progress isn’t a race; it’s a conversation between action and correction. At its core, it’s about learning from the right signals, because even the most advanced model can be brilliant at the wrong thing. That’s why we build systems where feedback leads and data follows, so intelligence stays grounded in the feedback that defines the quality of learning. Building around meaningful feedback keeps data not just powerful but purposeful: intelligence without a compass is just noise, and with the right feedback, data becomes a map toward purposeful progress.
