What Investors Should Know About the Data Supply Chain

“The phrase ‘data is the new oil’ captures the modern era’s defining resource. It must be refined, processed, and distributed to drive decisions.” - A.T. Kearney

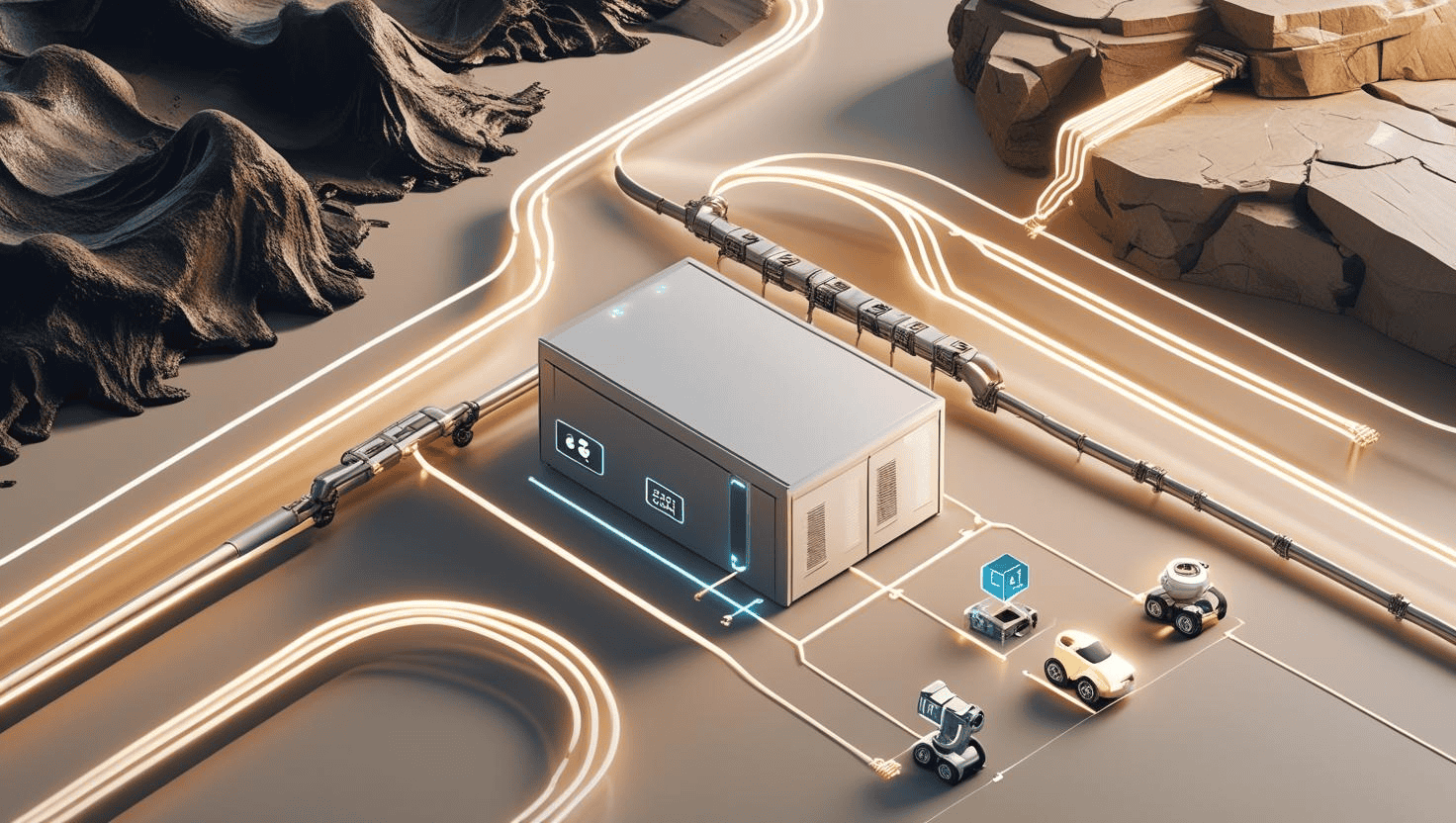

Data: The Infrastructure Layer of AI Value Chains

Just as oil powered the industrial age, data powers the AI age. But raw data, like crude oil, only gains value once it’s refined.

High-quality data pipelines are now the infrastructure layer of AI, ensuring models receive clean, consistent, annotated, and compliant inputs. This improves model performance while reducing the risk of costly deployment failures.

How LexData De-Risks AI Deployments

AI projects succeed or fail based on their inputs. Models trained on incomplete, biased, or low-resolution datasets:

- Produce unreliable outputs

- Undermine user trust

- Increase operational and compliance risks

LexData Labs addresses this by delivering consistent, scalable, and precise data pipelines:

- Scalability – Millions of high-quality data points across domains

- Consistency – Standardized annotation and validation processes

- Precision – Bespoke, domain-specific datasets instead of generic samples

This de-risks AI deployment, enabling clients to launch faster, scale with confidence, and adapt to new requirements without starting over.

Market Trends Shaping the Data Landscape

- Rise of Data-Centric AI

The focus is shifting from tweaking models to improving dataset quality. Demand for curated, domain-specific datasets is accelerating.

- Growing Regulatory Scrutiny

The EU AI Act and U.S. state-level rules are raising compliance standards around dataset sourcing, diversity, and bias mitigation. Compliance-ready pipelines are emerging as a competitive advantage.

- Demand for Domain-Specific Datasets

From EV infrastructure to agro-robotics, AI is entering specialized fields where generic data doesn’t work. Domain relevance is fast becoming the differentiator.

Industry Insight: The global AI training dataset market is projected to reach $8.6 billion by 2030, growing at a 21.9% CAGR (Grand View Research).

LexData’s Defensible, Scalable Model

Our hybrid approach blends automation and human-in-the-loop expertise:

- Automation – Large-scale collection, format conversion, and categorization

- Human Expertise – Annotation refinement, edge-case handling, and cultural/contextual accuracy

This creates a defensible competitive moat:

- Scales without sacrificing quality

- Adapts quickly to new domains and regulations

- Produces datasets competitors can’t easily replicate
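One common way to combine automation with human-in-the-loop review is confidence-based routing. Automated pre-labeling handles the bulk of items, and anything the automation is unsure about goes to human annotators. The Python sketch below illustrates only that general pattern. It is not LexData's actual pipeline. The `Item` type, the `route` function, the 0.9 threshold, and the sample labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Item:
    item_id: str
    label: str          # label proposed by a hypothetical automated pre-labeler
    confidence: float   # pre-labeler's confidence score, assumed to be in [0.0, 1.0]


def route(items: list[Item], threshold: float = 0.9) -> tuple[list[Item], list[Item]]:
    """Split items into auto-accepted labels and a queue for human review.

    Items at or above the (hypothetical) threshold keep their automated label;
    everything below it is sent to human annotators for refinement.
    """
    auto_accepted = [it for it in items if it.confidence >= threshold]
    needs_review = [it for it in items if it.confidence < threshold]
    return auto_accepted, needs_review


if __name__ == "__main__":
    # Illustrative batch only; item IDs and labels are made up.
    batch = [
        Item("img-001", "charging_station", 0.97),
        Item("img-002", "charging_station", 0.62),
        Item("img-003", "weed", 0.91),
    ]
    accepted, review = route(batch)
    print([it.item_id for it in accepted])  # ['img-001', 'img-003']
    print([it.item_id for it in review])    # ['img-002']
```

Raising the threshold sends more items to human review, trading throughput for accuracy. Lowering it does the opposite. In practice this trade-off is tuned per domain.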

The Investor Takeaway

AI is entering a new phase: data isn’t just fuel; it’s infrastructure.

Companies that control reliable, compliant, and adaptable data supply chains will define the next generation of market leaders.

LexData Labs sits at the heart of this shift, offering scalable pipelines, domain expertise, and quality assurance that provide both a competitive edge and a compliance advantage.

In short, we’re not just building datasets; we’re building the foundations of AI’s future.

